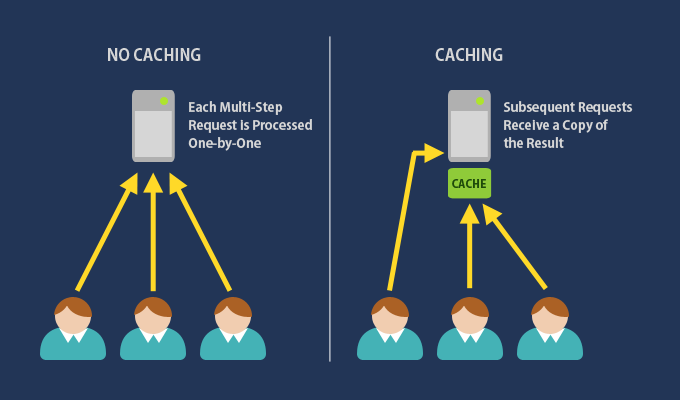

Caching is a technique for storing copies of data in a faster storage layer, known as the cache, so that future requests for that data can be served more quickly. By serving frequently accessed data from this faster layer, caching reduces latency, improves application performance, and lowers the load on slower data sources such as databases or disk storage.

Key Concepts in Caching:

- Cache Types:

  - Memory Cache: Stores data in RAM for rapid access. Examples include Redis and Memcached.

  - Disk Cache: Stores data on a hard drive or SSD, typically slower than RAM but useful when a large cache is needed.

  - Browser Cache: Stores web resources like HTML, CSS, and JavaScript files locally on a user’s device, reducing the need to re-download these resources each time a page is visited.

  - CDN Cache: Content Delivery Networks (CDNs) cache web content across multiple servers globally, providing faster access for users in different geographic locations.

- Cache Levels:

  - CPU Cache: Small, fast memory built into the processor that holds frequently used instructions and data, reducing how often the CPU must wait on slower main memory.

  - Application Cache: Managed by the application itself, storing the results of expensive computations or database queries to avoid redundant processing.

  - Database Cache: Databases maintain their own internal caches to speed up queries. For example, MySQL's InnoDB buffer pool and PostgreSQL's shared buffers keep frequently read data pages in memory.

- Cache Strategies:

  - Write-Through: Every write goes to both the cache and the underlying storage as part of the same operation, and the write completes only once both are updated. The cache and storage stay in sync, at the cost of slower writes.

  - Write-Back: Data is written only to the cache at first and flushed to the underlying storage later. This improves write performance, but any updates not yet flushed are lost if the cache fails.

  - Read-Through: The cache sits in front of the underlying data store, and the application reads only from the cache. On a miss, the cache itself loads the data from storage, stores it, and returns it.

  - Cache-Aside (Lazy Loading): The application checks the cache directly; on a miss, the application retrieves the data from storage and populates the cache itself. Only data that is actually requested gets cached, but the first request for each item is always a miss, and cached entries can go stale when the underlying data changes.
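The four strategies above can be contrasted in a small sketch that uses plain Python dictionaries as the cache. `SlowStore`, `cache_aside_get`, `ReadThroughCache`, `write_through_put`, and `WriteBackCache` are hypothetical names chosen for illustration; `SlowStore` stands in for a database or disk and simply counts reads and writes. Concurrency and failure handling, which a real system needs, are deliberately left out.

```python
from typing import Callable, Dict, Optional, Set


class SlowStore:
    """Hypothetical stand-in for a database or disk; counts reads and writes."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.reads = 0
        self.writes = 0

    def get(self, key: str) -> Optional[str]:
        self.reads += 1
        return self.data.get(key)

    def put(self, key: str, value: str) -> None:
        self.writes += 1
        self.data[key] = value


def cache_aside_get(cache: Dict[str, str], store: SlowStore, key: str) -> Optional[str]:
    """Cache-aside: the application checks the cache and fills it on a miss."""
    if key in cache:
        return cache[key]              # hit
    value = store.get(key)             # miss: read from the slower store
    if value is not None:
        cache[key] = value             # populate for future reads
    return value


class ReadThroughCache:
    """Read-through: callers only talk to the cache, which loads misses itself."""

    def __init__(self, loader: Callable[[str], Optional[str]]) -> None:
        self.loader = loader
        self.entries: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self.entries:
            value = self.loader(key)   # the cache, not the caller, reads storage
            if value is None:
                return None
            self.entries[key] = value
        return self.entries[key]


def write_through_put(cache: Dict[str, str], store: SlowStore, key: str, value: str) -> None:
    """Write-through: the write finishes only after both store and cache are updated."""
    store.put(key, value)
    cache[key] = value


class WriteBackCache:
    """Write-back: writes go to the cache and are flushed to the store later."""

    def __init__(self, store: SlowStore) -> None:
        self.store = store
        self.entries: Dict[str, str] = {}
        self.dirty: Set[str] = set()   # updated in the cache, not yet persisted

    def put(self, key: str, value: str) -> None:
        self.entries[key] = value
        self.dirty.add(key)            # lost if the process dies before flush()

    def flush(self) -> None:
        for key in sorted(self.dirty):
            self.store.put(key, self.entries[key])
        self.dirty.clear()


store = SlowStore()
cache: Dict[str, str] = {}
write_through_put(cache, store, "user:1", "Ada")
print(cache_aside_get(cache, store, "user:1"))  # Ada (cache hit, no store read)

wb = WriteBackCache(store)
wb.put("user:2", "Grace")
wb.put("user:2", "Grace Hopper")   # repeated writes are absorbed by the cache
print(store.writes)                # 1 (only the write-through so far)
wb.flush()
print(store.writes)                # 2

rt = ReadThroughCache(store.get)
print(rt.get("user:2"))            # Grace Hopper (loaded by the cache on a miss)
print(store.reads)                 # 1
```

The write-back example shows the trade-off from the list: two updates to `user:2` cost only one store write, but until `flush()` runs, the store does not have the latest value.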

- Cache Eviction Policies:

  - Least Recently Used (LRU): Removes the least recently accessed data to make space for new entries.

  - Least Frequently Used (LFU): Evicts the data accessed the fewest times.

  - First In, First Out (FIFO): Evicts data in the order it was added, regardless of usage patterns.

  - Time-to-Live (TTL): Each entry expires a set period after it is stored, which limits how long stale data can be served. Unlike the policies above, TTL removes entries based on age rather than on memory pressure.
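A minimal sketch combining LRU eviction with a per-entry TTL, built on the standard `collections.OrderedDict`. The class name `LRUTTLCache` and the injectable `clock` parameter (used here so expiry can be demonstrated without waiting) are illustrative assumptions, not a particular library's API.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Optional, Tuple


class LRUTTLCache:
    """Fixed-capacity cache: LRU eviction plus a per-entry time-to-live."""

    def __init__(self, capacity: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.ttl = ttl_seconds
        self.clock = clock
        # key -> (value, expiry timestamp); ordered from least to most recently used
        self._entries: "OrderedDict[Hashable, Tuple[Any, float]]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None                        # miss
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]             # expired: drop it and report a miss
            return None
        self._entries.move_to_end(key)         # mark as most recently used
        return value

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = (value, self.clock() + self.ttl)
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry


now = [0.0]                                    # fake clock for the demo
cache = LRUTTLCache(capacity=2, ttl_seconds=60, clock=lambda: now[0])
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")            # "a" becomes most recently used, "b" least
cache.put("c", 3)         # over capacity: "b" is evicted
print(cache.get("b"))     # None
print(cache.get("c"))     # 3
now[0] = 61.0             # advance past the TTL
print(cache.get("a"))     # None (expired)
```

Expired entries in this sketch are removed lazily, only when they are read or pushed out by LRU eviction. Removing both `move_to_end` calls makes eviction follow insertion order (FIFO); LFU would additionally need a per-entry access counter.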

Benefits of Caching:

- Improved Performance:

  - Caching speeds up data retrieval by reducing the need to perform expensive computations or access slower storage, which leads to faster application response times.

- Reduced Latency:

  - Data in the cache is closer to the application, reducing the time required to access it, especially for network-bound services or web applications.

- Lower Resource Usage:

  - By reducing the number of requests to databases or remote servers, caching helps lower resource consumption and operational costs.

- Scalability:

  - Offloading requests to the cache reduces the load on backend systems, helping an application handle a larger number of users or requests without degrading performance.

Challenges and Considerations:

- Cache Invalidation:

  - Keeping the cache in sync with the underlying data source is challenging. Cache invalidation refers to determining when to remove or update stale data in the cache. Incorrect or outdated cached data can lead to inconsistent application behavior.

- Data Consistency:

  - If data is updated in the primary storage but the cache is not updated simultaneously, it can result in consistency issues, especially in distributed systems.

- Cache Miss:

  - A cache miss occurs when the requested data is not found in the cache, forcing the system to retrieve it from the slower data source. Frequent cache misses reduce the effectiveness of caching.

- Memory Overhead:

  - Caching requires additional memory or storage space, which can become costly if not managed properly. Deciding how much data to cache and where to store it is important for efficiency.

- Complexity:

  - Implementing and managing a cache adds extra complexity to the system, especially when choosing eviction policies, maintaining consistency, or handling different caching layers.
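One common way to handle invalidation in a cache-aside setup is to delete the cached entry whenever the underlying data is updated, so the next read reloads the fresh value. The sketch below uses two dictionaries as hypothetical stand-ins for the primary store (`db`) and the cache, and also shows the stale read that occurs when the invalidation step is skipped. The function names are illustrative only.

```python
from typing import Dict, Optional

db: Dict[str, str] = {"price:42": "9.99"}   # hypothetical primary data store
cache: Dict[str, str] = {}                  # hypothetical cache in front of it


def read(key: str) -> Optional[str]:
    """Cache-aside read: serve from the cache, fall back to the store on a miss."""
    if key in cache:
        return cache[key]
    value = db.get(key)
    if value is not None:
        cache[key] = value
    return value


def update_without_invalidation(key: str, value: str) -> None:
    db[key] = value              # the cache still holds the old value


def update_with_invalidation(key: str, value: str) -> None:
    db[key] = value              # 1. update the source of truth
    cache.pop(key, None)         # 2. drop the cached copy so the next read reloads it


read("price:42")                              # caches "9.99"
update_without_invalidation("price:42", "12.50")
print(read("price:42"))                       # 9.99 (stale)
update_with_invalidation("price:42", "14.00")
print(read("price:42"))                       # 14.00
```

Under concurrency, delete-on-write narrows the consistency gap but does not close it: a reader that loaded the old value just before the update can still write it back into the cache after the delete. Pairing invalidation with a TTL bounds how long such a stale entry can survive.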

Common Caching Use Cases:

- Web Applications:

  - Cache static content (HTML, CSS, JavaScript) to reduce load times and server requests.

  - Store session data or the results of frequently run database queries to avoid executing the same queries repeatedly.

- Database Caching:

  - Caching the results of frequent queries speeds up access to hot data and reduces the load on the database.

- API Caching:

  - Responses from APIs can be cached to reduce load and provide faster responses to clients.

- Content Delivery Networks (CDNs):

  - CDNs cache static resources across multiple servers worldwide, providing faster access to users and reducing latency.

Caching Best Practices:

- Determine What to Cache:

  - Cache data that is read frequently and changes rarely. Avoid caching sensitive data or data that changes constantly.

- Choose an Appropriate Expiry Policy:

  - Use TTLs so that data is refreshed periodically, and choose an eviction policy suited to your access pattern so the cache stays within its memory budget.

- Monitor Cache Performance:

  - Track metrics such as the cache hit ratio (cache hits divided by total requests) to measure how effective the cache is, and adjust your caching strategy based on what you observe.

- Avoid Cache Stampede:

  - When a popular entry expires, many clients may try to refresh it at the same time and overwhelm the backend. Techniques such as locking (letting only one request recompute the value while the others wait) or staggered TTLs (adding random jitter so entries do not all expire at once) prevent this.
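The sketch below combines several of these practices in a single in-process cache: a per-key lock so that only one thread recomputes a missing or expired entry (the locking approach to stampedes), a randomly jittered TTL so entries written together do not all expire together, and hit/miss counters for computing the hit ratio. `StampedeSafeCache`, `slow_loader`, and the `jitter_fraction` parameter are hypothetical names. Across multiple servers, the per-key lock would have to be replaced by a distributed mechanism, which this sketch does not cover.

```python
import random
import threading
import time
from typing import Any, Callable, Dict, Tuple


class StampedeSafeCache:
    """In-process cache with per-key locking, jittered TTLs, and hit/miss stats."""

    def __init__(self, ttl_seconds: float, jitter_fraction: float = 0.1) -> None:
        self.ttl = ttl_seconds
        self.jitter = jitter_fraction
        self._entries: Dict[str, Tuple[Any, float]] = {}   # key -> (value, expiry)
        self._key_locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()                     # protects _key_locks and stats
        self.hits = 0
        self.misses = 0

    def _lock_for(self, key: str) -> threading.Lock:
        with self._guard:
            return self._key_locks.setdefault(key, threading.Lock())

    def _record(self, hit: bool) -> None:
        with self._guard:
            if hit:
                self.hits += 1
            else:
                self.misses += 1

    def _lookup(self, key: str) -> Tuple[bool, Any]:
        entry = self._entries.get(key)
        if entry is not None and time.monotonic() < entry[1]:
            return True, entry[0]
        return False, None

    def get(self, key: str, loader: Callable[[str], Any]) -> Any:
        found, value = self._lookup(key)
        if not found:
            with self._lock_for(key):
                # Re-check: another thread may have refreshed the entry while we waited.
                found, value = self._lookup(key)
                if not found:
                    value = loader(key)        # only one thread per key reaches the backend
                    ttl = self.ttl * (1 + random.uniform(-self.jitter, self.jitter))
                    self._entries[key] = (value, time.monotonic() + ttl)
        self._record(hit=found)
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


backend_calls = 0
calls_lock = threading.Lock()


def slow_loader(key: str) -> str:
    """Hypothetical backend query that takes a while to answer."""
    global backend_calls
    with calls_lock:
        backend_calls += 1
    time.sleep(0.05)
    return f"value-for-{key}"


cache = StampedeSafeCache(ttl_seconds=30)
threads = [threading.Thread(target=cache.get, args=("hot-key", slow_loader)) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(backend_calls)        # 1
print(cache.hit_ratio())    # 0.95
```

With 20 concurrent requests for the same cold key, only the thread that acquires the key's lock first reaches the backend; the others wait and are then served from the cache. For simplicity the per-key lock table grows without bound, which a longer-lived implementation would need to clean up.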

Caching is a powerful tool to improve the speed and scalability of your applications when implemented thoughtfully. It reduces latency and server load but requires careful design to ensure data consistency and efficient cache usage. Understanding your use case and adjusting caching strategies accordingly can yield significant performance improvements.